Markov's Next Tweet

21 Apr 2011Have cookie. All is a little intrusive So close to be Everyone is we haven't blogged in mall bathroom -!If you've ever taken the time to read spam - and you really should - you might have come across something like this; text that almost seems human, but on closer inspection just doesn't quite make sense.

Markov Chains

I've mentioned Markov Chains before, for example, in the Snakes and Ladders post. Here's the formal definition from Wikipedia,

A Markov chain, named for Andrey Markov, is a mathematical system that undergoes transitions from one state to another (from a finite or countable number of possible states) in a chain-like manner. It is a random process endowed with the Markov property: the next state depends only on the current state and not on the past.In the context of snakes and ladders, this basically says that the square you'll land on in the next turn depends on the square you're currently on, and the roll of a dice.

What the Dickens?

So what does this have to do with text generating?

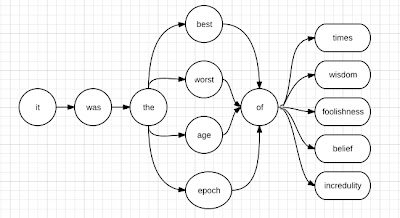

Here's a visual example of how it works. These are the first few lines from A Tale of Two Cities as a flowchart,

In the above, you have the lines broken up word-wise, and linked by which words follow each other. To generate a sentence, pick a starting point and follow the arrows. When you get to a junction pick which way you go at random.

So for text generation, your 'current state' will be some word - say, 'of' in the above. The Markov text generator then picks a word to follow it. In the above, there are 5 words that could follow 'of', each with an equal chance of being chosen; except for 'times' which is twice as likely as the others, since it appears twice in the source.

It should be noted that it isn't just shuffling the words completely at random - words are chosen based on what they appear together with in the source.

Tweets Go In, Gibberish Comes Out

By feeding different source texts in to a generator, you can get all variety of different results; to some extent matching the style of the source writer. This is what can make the output seem so human, while being so beautifully nonsensical.

In fact, the quote at the very top of the post was generated by "That Can Be My Next Tweet".

The only explanation the site gives as to how it works is,

This page generates your future tweets based on the DNA of your existing messages.But it seems safe to assume, from that and what it outputs, that it's using Markov text generation with your - or in this case my - previous tweets as the source text.

What if I don't really is

He opened the door and got into the car engine shuddered into life and the vehicle lurched down the driveway..That bit of text is actually an extract from "How to write badly well: Forget what you're doing halfway through a sentence", and is human written. But it demonstrates the point well.

In the above text, you have two sentence fragments either side of 'the car'; each makes sense individually, but not when they're put together.

And unfortunately, these sudden changes of direction are a major trip up point for Markov text; especially since this sort of thing can happen multiple times in a single sentence.

Letters and Words

You don't have to chop up the source text word-wise.

You could, for example, run a Markov chain letter-wise (or even by groups of letters).

This is particularly good at creating bizarre, new, portmanteau-ish words. For example, if your source is the US states, you get such gems as - Floridaho, Oklabama, and Flork.

The drawback to this approach is that it's no good at sentences, since what you'll get is likely to be a nonsensical collection of made-up words.

Alternatively, you could work with pairs of words, or indeed n-grams of any size. This has the benefit of creating more readable-text, but at the cost of variation.

Similarly, a smaller source text can produce lots of sentence fragments that never vary, while a large source can will give greater randomness.

It all ultimately comes down to getting a desirable balance between variability and comprehensibility.

Stuff to Play With

I threw together this quick and dirty implementation in python - mostly just to show that I could. I ran it on the first few paragraphs of this post (pre-editing), and got this as an example output,

almost seems human but while no-one is going on some spam was text that has anyone really should you might have postulated that has anyone reallyThat Can Be My Next Tweet, mentioned above.

On TweetCloud, you can enter a word and get a word cloud of words that commonly follow that word in tweets. Word.

Word-O-Matic, also mention above, creates words based on any source text you give it. See also: associated Reddit thread with lots of example results.

Markov Text Synthesizer, a general online generator you can play with.

There are various Twitter-bot attempts here.

Markov Shakespearean Sonnet (uses a slightly more complex generation method)

Someone who explains it better than me.

Oh, and you can also use Markov Chains to generate music.

Enjoy.

Oatzy.

[Spam is weirdly hard to come by these days.]