The Oscars Vs. Rotten Tomatoes

28 Jan 2011

What Do Critics Know

This is something I looked at briefly last year. I wondered if there was any correlation between Rotten Tomatoes score - a score based on an aggregate of critics' reviews - and winning an Academy Award for Best Picture. That is, is the highest rated of the nominees for a given year most likely to win?

The short answer is no.

Data Mining

For those interested, the data was collected like this.

First, the list of nominees and winners is copied from Wikipedia. The data is then imported into Google Refine to be tidied up and sorted. Then, still in Refine, the list is reconciled - that is, each film on the list is linked to the appropriate page on FreeBase.

Having been reconciled, extra data can then be pulled from FreeBase; and with a bit of extra coding, the Rotten Tomatoes scores can also be mined.

NB/ some scores had to be input by hand, some scores are unavailable, and there may (but not necessarily) be errors.

You can look at the data here.

Analysis

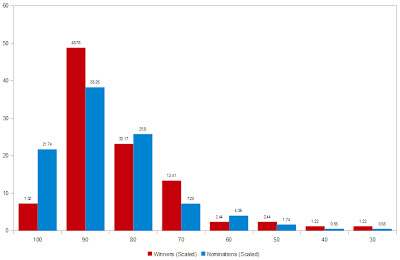

First of all, I looked at the distribution of scores for winners vs. nominees.

If there were some correlation, you'd expect the winners to be, generally, further to the left than the other noms. But looking at the graph, there's hardly any significant difference. In fact, the proportion of nominees with a score of 100 is greater than that of the winners.

NB/ the numbers were scaled to matching sizes.
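For anyone wanting to reproduce the scaling, here is a minimal sketch of one way to do it: convert each group's counts into proportions, so that the small group of winners and the larger group of nominees can be plotted on the same axis. The function name `score_proportions`, the bin width of 10 and the scores below are all made up for illustration; they are not the real data or necessarily how the graph above was produced.

```python
from collections import Counter

def score_proportions(scores, bin_width=10):
    """Return {bin_start: share of films in that bin} for a list of 0-100 scores."""
    if not scores:
        return {}
    # Integer-divide to find each score's bin; a score of 100 gets its own bin.
    counts = Counter((s // bin_width) * bin_width for s in scores)
    total = len(scores)
    return {b: counts[b] / total for b in sorted(counts)}

# Toy scores only (hypothetical, not taken from the spreadsheet).
winners = [94, 88, 100, 75]
nominees = [94, 91, 100, 100, 62, 85, 97, 79]

winner_shares = score_proportions(winners)
nominee_shares = score_proportions(nominees)
```

Because each set of shares sums to 1, the two distributions can be compared directly even though there are several nominees for every winner.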

I also looked at the number of winners that were the highest rated in their year - this works out at 22%. Which isn't amazing (a little over one fifth). And on top of that, the number of winners that were the lowest rated in their year works out at 18.3% (a little under one fifth).
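The counting behind those two percentages can be sketched roughly as below. This is an illustration under assumptions, not the exact process used: the row layout `(year, title, score, won)` and the function name `rank_shares` are hypothetical, films with no score are skipped, and a winner that ties for highest (or lowest) is counted as highest (or lowest). The rows are toy data.

```python
from collections import defaultdict

def rank_shares(rows):
    """rows: iterable of (year, title, score, won); score is None if unavailable.

    Returns (share of winners rated highest in their year,
             share of winners rated lowest in their year).
    """
    by_year = defaultdict(list)
    for year, _title, score, won in rows:
        if score is not None:
            by_year[year].append((score, won))

    top = bottom = counted = 0
    for films in by_year.values():
        winner_scores = [s for s, won in films if won]
        if not winner_scores:
            continue  # the winner's score is missing, so skip this year
        scores = [s for s, _ in films]
        counted += 1
        top += winner_scores[0] == max(scores)     # ties count as highest
        bottom += winner_scores[0] == min(scores)  # ties count as lowest
    if counted == 0:
        return 0.0, 0.0
    return top / counted, bottom / counted

# Toy rows only (hypothetical years, titles and scores).
rows = [
    (1, "A", 90, True), (1, "B", 80, False), (1, "C", 70, False),
    (2, "D", 60, True), (2, "E", 85, False), (2, "F", 95, False),
    (3, "G", 75, True), (3, "H", 90, False), (3, "I", 60, False),
    (4, "J", 88, True), (4, "K", 88, False), (4, "L", 70, False),
]
top_share, bottom_share = rank_shares(rows)
```

How ties are handled matters for a year like 2008, where two nominees share the top critic score, so a different tie rule would shift the percentages slightly.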

So as I said in the short answer, there's not much of a correlation.

I also tried working with only the films since 1970, since most of the unavailable scores were for films prior to then. But the results were effectively the same.

Audience Knows Best

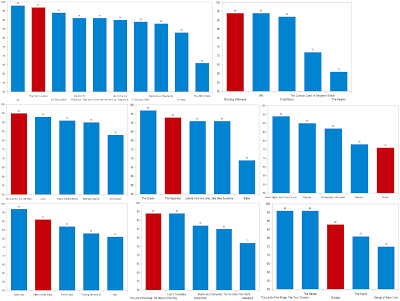

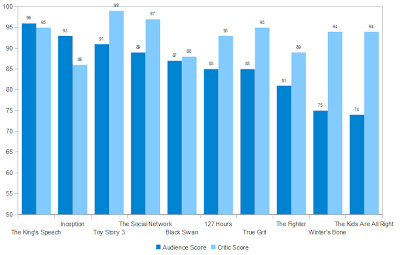

I created a few year-wise graphs, such as below (2009-2002)

and looking at them only confirmed the weak correlation between score and winning.

But one in particular stood out - 2005. In 2005, Crash won the award despite being the lowest rated of the nominees. But I'll come back to that.

The other one that got me thinking was 2008 - both Slumdog Millionaire and Milk got a rating of 94.

But it occurred to me that maybe the reason Slumdog won was that it appealed to a wider audience. Think about it: Slumdog is a love story whose protagonist overcomes adversity and, against all odds, gets a happy ending. Milk is a biopic about a politician and gay rights activist who is assassinated. Both are top quality films, but Slumdog has wider appeal. And maybe that's important.

Granted, I'm exaggerating the effect of wider appeal a little for the sake of making my point. But you get the idea.

You have to bear in mind that the Academy Award for Best Picture isn't voted for by a handful of people with a potentially homogeneous taste - it's voted for by a committee of over 5,000 people. So having that wider appeal is going to help.

This would also explain why genre films tend to struggle.

Luckily, Rotten Tomatoes also has an "Audience" rating - a score out of 100, based on audience interest. And sure enough, Slumdog gets a score of 90, versus Milk with 88. Granted, it's a small difference, but it gives some credence to the theory.

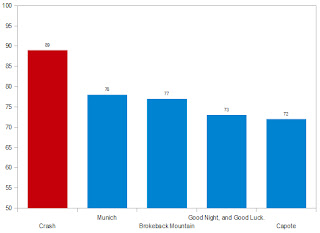

So going back to 2005, I checked the Audience scores,

and indeed Crash was the highest audience rated.

Caveats

Firstly, Audience scores fall into two variations - "want to see/not interested", or "liked/disliked". Generally, the older films are in the former category, while the newer are in the latter.

And each of these has its own potential problems.

For the first, the problem is causality - did a film get the Oscar because of the wider interest, or are people interested in seeing it because it won an Oscar?

There are also psychological considerations. People are weird; when a film gets a generally strong (or poor) response from critics, this can influence people's opinions - some people like to hate things because everyone loves them. Some people are just influenced by their peers' opinions.

But these aren't really things we can test or adjust for.

The Audience score is also going to be loosely related to the critic score, in as much as they both relate, to some extent, to the film's 'quality'.

Statistically Wiser

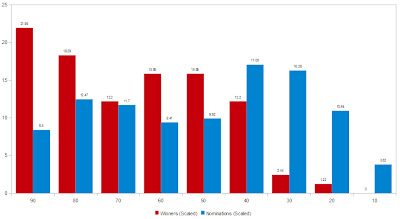

So as before, we have the score distribution.

And this is more what you'd want to see - the winners having a stronger tendency towards higher scores than the nominees.

Similarly, you can work out how many of the winners were highest rated in their year - in this case 36.6%, up from 22% for critic score. Likewise, the number ranked lowest in their year drops from 18.3% to 7.3%.

And together, this supports the idea that there's a stronger skew towards higher scores, and thus that audience opinion is a better indicator of Oscar winners than critical opinion.

Of course, the better predictive power from the audience score could just be an example of wisdom of the crowd.

Obviously, this still isn't 100% predictive power, or even 50%. So it's far from perfect. I also tried various ways of combining both scores, but wasn't able to significantly improve on the results for audience scores alone.
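To give a flavour of what "combining both scores" could mean, here is one possible approach, sketched under assumptions: blend the critic and audience scores with a weight, pick the top-blended nominee in each year, and see how often that pick actually won. This is not necessarily any of the combinations I tried; the function name `hit_rate`, the tuple layout and the numbers are hypothetical, and the toy data is contrived so that the three weights give different results.

```python
def hit_rate(years, weight):
    """Share of years where the top blended score belongs to the winner.

    years:  list of years, each a list of (critic, audience, won) tuples.
    weight: share given to the critic score (0 = audience only, 1 = critic only).
    """
    hits = 0
    for films in years:
        # On a tie, max() keeps the first film listed.
        pick = max(films, key=lambda f: weight * f[0] + (1 - weight) * f[1])
        hits += pick[2]
    return hits / len(years)

# Toy data only (hypothetical scores, not the real nominees).
years = [
    [(94, 90, True), (94, 88, False), (72, 80, False)],
    [(75, 88, True), (87, 72, False), (93, 80, False)],
    [(95, 70, False), (85, 85, True), (80, 75, False)],
]

audience_only = hit_rate(years, 0.0)
critic_only = hit_rate(years, 1.0)
even_blend = hit_rate(years, 0.5)
```

Sweeping `weight` from 0 to 1 over the real data would show whether any blend beats the audience score on its own.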

Prediction 2011

Based on all this, I'd say the smart money for this year would be The King's Speech.

While it's only joint-third highest rated of the year according to the critics, it has the highest audience score of the nominees.

But then, everyone's expecting it to win anyway.

We shall have to wait and see...

Oatzy.